Each file in the list is passed to the s3_upload_file_multipart() method. If the file size is greater than 100 MB, the file is uploaded via multipart; otherwise it is uploaded as a standard file.

    for ftp_file in files_to_upload:
        sftp_file_obj = self.sftp_client.file(ftp_file, mode='r')
        if self.s3_upload_file_multipart(sftp_file_obj, s3_partition + ftp_file):
            print('file uploaded to s3')
            files_to_move.append(ftp_file)

Every file that uploads successfully is moved to the processed directory that we created on the FTP server, using the move_files_to_processed() method. This is done in order to keep track of all the files that have been successfully uploaded to S3.

    if files_to_move:
        for uploaded_file in files_to_move:
            self.move_files_to_processed(uploaded_file)
    else:
        print("nothing to upload")

After all the files have been successfully uploaded, we close all the connections using the close_connections(self) method.

create_s3_partition method

s3_upload_file_multipart method

This method uploads the files to the specified S3 bucket. It uses the TransferConfig class to handle the multipart upload. We specify the configurations for TransferConfig as follows:

    config = TransferConfig(multipart_threshold=cfg.MULTIPART_THRESHOLD,
                            multipart_chunksize=cfg.MULTIPART_CHUNKSIZE,
                            max_concurrency=cfg.MAX_CONCURRENCY,
                            use_threads=cfg.USER_THREADS)

- The multipart_threshold parameter determines the file size above which a file is uploaded via multipart. AWS recommends that all files greater than 100 MB be uploaded via multipart, so I have set this parameter to 100 MB. This can be changed in the config.py file.
- The multipart_chunksize parameter determines the size of each part during a multipart upload.
- The max_concurrency parameter determines the maximum number of concurrent S3 API transfer operations used for a transfer.
- Lastly, the use_threads parameter determines whether transfer operations use threads to implement concurrency.

Thread use can be disabled by setting the use_threads attribute to False. If thread use is disabled, transfer concurrency does not occur. Accordingly, the value of the max_concurrency attribute is ignored.
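To make the interplay between multipart_threshold and multipart_chunksize described above more concrete, here is a minimal sketch of the decision in plain Python. It is not taken from the author's code or from boto3: the function name plan_upload is hypothetical, the 25 MB chunk size in the usage lines is an illustrative value, and treating a file exactly at the threshold as multipart is an assumption. It also ignores the part-count and part-size limits that S3 enforces.

```python
import math

MB = 1024 * 1024


def plan_upload(file_size, chunk_size, threshold=100 * MB):
    """Return (mode, parts) for a file of file_size bytes.

    Hypothetical helper: a simplified model of the threshold/chunk-size
    decision, not the logic boto3 runs internally.
    """
    if file_size < threshold:
        # Below the threshold the file goes up in a single request.
        return ("standard", 1)
    # At or above the threshold the file is split into chunk_size parts;
    # the last part may be smaller than the others.
    return ("multipart", math.ceil(file_size / chunk_size))


# Illustrative values only: 25 MB parts with the article's 100 MB threshold.
small = plan_upload(40 * MB, chunk_size=25 * MB)
large = plan_upload(260 * MB, chunk_size=25 * MB)
```

With these numbers, the 40 MB file is sent as a single standard upload, while the 260 MB file is split into eleven parts (ten full 25 MB parts plus one 10 MB remainder).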
The ftp_obj then calls the initiate_ingestion(self) method, which first creates an SSH connection with our FTP server using the create_ssh_connection(self) method. On a successful connection, an SFTP connection is created using the create_sftp_connection(self) method, which we use to access the FTP server.

Once the SFTP connection is established, we do the following:

- Change the current directory to the parent directory: self.sftp_client.chdir(self.ftp_directory_path)
- Get all the files that are to be uploaded to S3: files_to_upload = self.sftp_client.listdir()
- Create the S3 partition structure using the create_s3_partition(self) method. I'm using the current date and time to set up the partition structure as follows: year = / month = / day = / hour = /file
- After this, we start uploading the files one by one.
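As a rough illustration of the date-based partition structure mentioned above, a key prefix could be built from the current date and time as shown below. This is only a sketch: the function name build_s3_partition, the zero-padded values, the use of UTC, and the file name sales.csv are assumptions, not details of the author's create_s3_partition method.

```python
from datetime import datetime, timezone


def build_s3_partition(now=None):
    """Return a key prefix of the form year=YYYY/month=MM/day=DD/hour=HH/.

    Hypothetical helper: zero padding and UTC are assumptions.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now.strftime("year=%Y/month=%m/day=%d/hour=%H/")


# Prefix for a fixed timestamp, then the full object key for one file.
prefix = build_s3_partition(datetime(2024, 1, 5, 13, 30))
key = prefix + "sales.csv"  # hypothetical file name
```

Prepending the prefix to each file name, as the upload loop does with s3_partition + ftp_file, places every file ingested in the same hour under the same partition.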

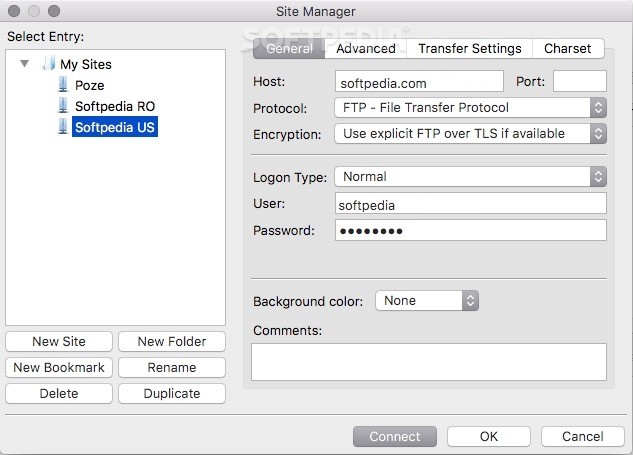

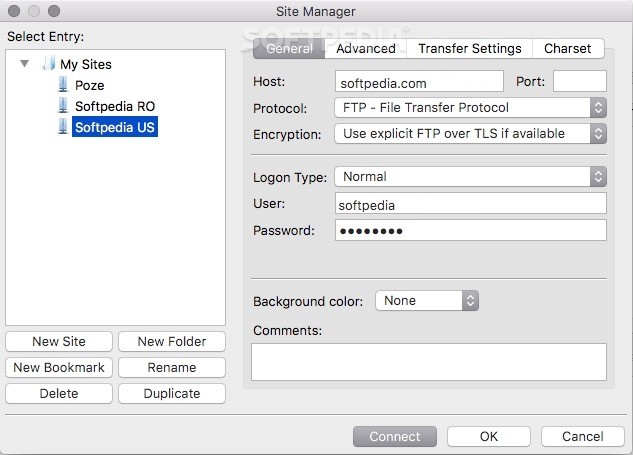

When developing data lake pipelines, data ingestion is an important step in the entire process. We need a reliable, secure and fault-tolerant method to bring our files from the source (the client FTP server) to our target (AWS S3). Considering how important this step is, I developed an implementation in Python that can be used to ingest files from an FTP server to AWS S3. I have designed the code so that it can be used in a managed (AWS Glue) or unmanaged (local machine) environment.

In this article I will explain how we can transfer files present on an FTP server such as FileZilla to Amazon S3 using the Paramiko library in Python. The file ingestion code can be found on my GitHub repository.

The file ingestion code does the following things:

- Creates a secure SSH SFTP connection with the file server using Paramiko.
- Automatically handles single-part and multipart upload depending on the file size (multipart at 100 MB or more).
- Automatically handles retries in case of failed part uploads during multipart upload.
- Partitions the data in S3 based on the current year, month, day and hour.
- Can be used as a Python job in AWS Glue to transfer files from FTP to S3.

I will do a step-by-step walkthrough of each section of the code for better understanding.
